How Google’s MedGemma and MedSigLIP Are Redefining AI in Healthcare

In the rapidly evolving intersection of artificial intelligence and medicine, Google Research and DeepMind have introduced two breakthrough models, MedGemma and MedSigLIP, that could transform how AI is applied in clinical environments. These models offer a powerful mix of precision, adaptability, and efficiency in interpreting both medical text and images. But beyond the tech specs, what do they really mean for doctors, researchers, and patients?

Let’s break it all down.

Why AI in Healthcare Is So Challenging

Healthcare isn’t just another industry AI can breeze through. Medical data is complex, vast, and sensitive. From dense clinical notes to intricate radiology scans, the diversity of information and the strict privacy demands create a high-stakes environment. General-purpose AI models often fall short in this domain. They might be brilliant at processing language or general visuals, but medicine requires a deeper, more specialized understanding.

This is where Google’s new models step in.

Introducing MedGemma and MedSigLIP

Google’s Health AI Developer Foundations Collection is a suite of tools designed to power a new generation of medical applications. At its core are two key models:

MedGemma is a family of vision-language models built on the powerful Gemma 3 architecture, tuned specifically for medicine.

MedSigLIP is a medical image encoder designed to extract deep insights from complex medical visuals across multiple domains.

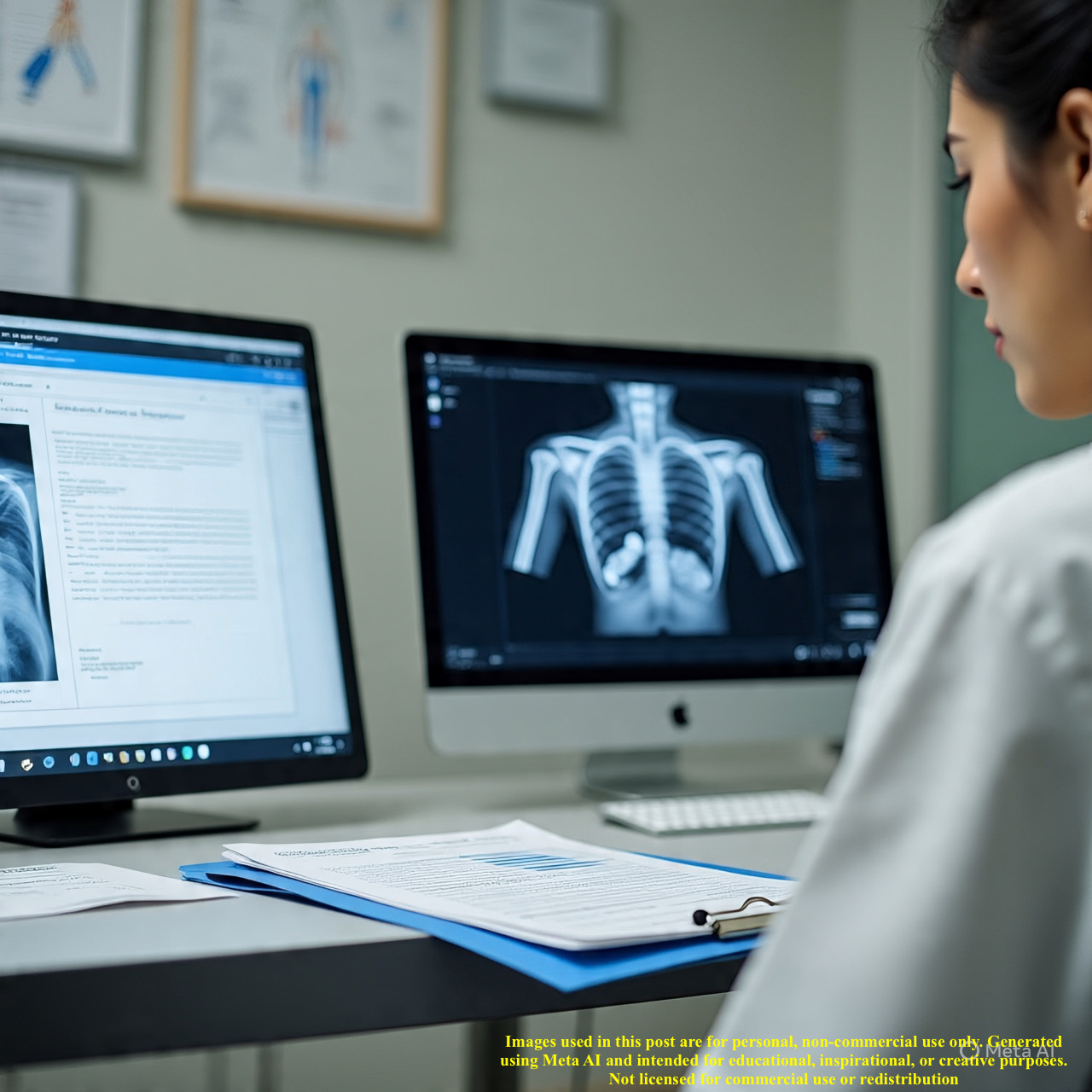

Think of MedGemma as a generalist that’s been to medical school, with both broad reasoning skills and deep clinical knowledge. It can handle text, images, or both, making it versatile in how it processes information. Meanwhile, MedSigLIP acts as the model’s visual system, expertly interpreting medical imagery from chest X-rays to skin lesions.

How Were These Models Trained?

The training strategy behind these models is what makes them so robust. Rather than just dumping in raw medical data, the development team used a layered approach:

1. Start with General Intelligence

They began with the Gemma 3 foundation, ensuring the models retained general reasoning abilities.

2. Add Rich Medical Data

They then integrated a wide range of medical-specific datasets:

Textual Data: MedQA, PubMedQA, and over 200,000 AI-generated medical questions.

Visual Data: CT and MRI slices, retinal and dermatology images, and histopathology patches. In total, millions of image-text pairs were used.

3. Smart Preprocessing

Images were upscaled to 896×896 pixels to ensure no clinical details were missed. CT scans were enhanced by color-channel manipulation to better highlight different tissue types.
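To make the color-channel idea concrete, here is a minimal sketch of one way it could work: each of the three color channels gets a different intensity window over the same CT slice, so different tissue types stand out in different channels. The window names and Hounsfield-unit ranges below are illustrative assumptions, not values taken from Google's pipeline, and `window_channel` and `ct_slice_to_rgb` are hypothetical helper names.

```python
# Hypothetical intensity windows (low, high) in Hounsfield units.
# These ranges are assumptions for illustration, not the published settings.
WINDOWS = {
    "wide": (-1024, 1024),
    "soft_tissue": (-135, 215),
    "brain": (0, 80),
}


def window_channel(hu, low, high):
    """Clip one Hounsfield-unit value to [low, high] and rescale it to 0..255."""
    clipped = min(max(hu, low), high)
    return round(255 * (clipped - low) / (high - low))


def ct_slice_to_rgb(slice_hu):
    """Turn a 2D list of HU values into a 2D list of (R, G, B) tuples.

    Each channel shows the same pixel through a different window.
    """
    ranges = list(WINDOWS.values())
    return [
        [tuple(window_channel(v, lo, hi) for lo, hi in ranges) for v in row]
        for row in slice_hu
    ]


# Tiny 2x2 "slice": air, water, soft tissue, dense bone.
demo_slice = [[-1000, 0], [40, 1000]]
rgb = ct_slice_to_rgb(demo_slice)
print(rgb[0][0])  # air is dark in every channel
print(rgb[1][1])  # dense bone saturates the narrow windows
```

The narrow windows spend their full 0 to 255 range on a small band of densities, which is why subtle soft-tissue differences become visible that a single wide window would flatten.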

4. Three-Stage Training

Vision Encoder Tuning using 33+ million image-text pairs.

Multimodal Decoder Pre-training to align vision and text understanding.

Post-training with Reinforcement Learning and Distillation, boosting adaptability and performance on new tasks.

The Results: Performance That Surprises

The big question with any AI model is: Does it actually work in the real world?

The short answer for MedGemma and MedSigLIP is yes, and then some.

Outperforming Human Physicians

On the AgentClinic-MedQA benchmark, MedGemma 27B scored 56.2%, edging out the 54.0% average achieved by human physicians, a margin of 2.2 percentage points. This doesn’t mean AI is ready to replace doctors, but it does show real promise as a diagnostic assistant.

Better Than Bigger Models

MedGemma outperformed much larger proprietary models in both text-based and visual tasks. For instance, its chest X-ray (CXR) report generation was so effective that 81% of AI-generated reports led to clinical decisions that were equal or better than those based on human-written reports.

High Efficiency at Low Cost

MedGemma 4B is up to 500 times cheaper to run than some of the largest competing models. That makes it viable for use in low-resource clinics, smaller hospitals, and research labs.

MedSigLIP: The Visual Powerhouse

MedSigLIP is no slouch either. Despite operating at a lower resolution (448×448), it:

Outperformed a dedicated chest X-ray model by 2% in zero-shot classification.

Boosted fracture classification accuracy by over 7%.

Beat a specialized dermatology model in skin condition identification.

It’s a single visual model that can handle diverse domains - pathology, ophthalmology, dermatology - all with impressive results.
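For readers unfamiliar with zero-shot classification, the sketch below shows the general idea behind image-text encoders of this kind: the image and a set of candidate text labels are each turned into embedding vectors, and the label whose embedding is most similar to the image wins, with no task-specific training. The embeddings here are made-up three-dimensional toy values and `zero_shot_classify` is a hypothetical helper; a real encoder would produce the vectors, and this is not MedSigLIP's actual API.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_emb, label_embs):
    """Return the best-matching label and the similarity score for every label."""
    scores = {label: cosine(image_emb, emb) for label, emb in label_embs.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy embeddings (assumed values); in practice a text encoder embeds each prompt.
label_embs = {
    "a chest X-ray with pleural effusion": [0.9, 0.1, 0.0],
    "a chest X-ray with no findings": [0.0, 0.2, 0.9],
}
image_emb = [0.8, 0.3, 0.1]  # would come from the image encoder

best, scores = zero_shot_classify(image_emb, label_embs)
print(best)
```

Because the labels are just text prompts, adding a new condition means adding a new prompt rather than retraining a classifier, which is what lets one encoder cover several specialties.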

Practical Use Cases in the Real World

These models aren't just academic showpieces. Here’s how they could revolutionize actual healthcare workflows:

Medical Image Retrieval - Instantly find similar past cases to aid diagnosis or research.

Clinical Report Generation - Draft detailed, accurate reports faster, a huge help for radiologists.

EHR Information Extraction - Quickly pull relevant details from massive patient records.

Clinical Trial Matching - Identify eligible patients using model-assisted record analysis.

Training and Education - Build AI-powered teaching tools that walk students through medical scenarios.
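As an illustration of the first use case, image retrieval typically comes down to a nearest-neighbor search over stored embeddings. The sketch below assumes every archived case has already been encoded into a vector; the case IDs, the toy vectors, and the `top_k_similar` helper are all hypothetical, and a production system would likely use a dedicated vector index rather than a linear scan.

```python
import heapq
import math


def normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def top_k_similar(query_emb, index, k=2):
    """Return the IDs of the k stored cases most similar to the query embedding."""
    q = normalize(query_emb)
    scored = (
        (sum(a * b for a, b in zip(q, normalize(emb))), case_id)
        for case_id, emb in index.items()
    )
    return [case_id for _, case_id in heapq.nlargest(k, scored)]


# Hypothetical archive: case ID -> precomputed image embedding (toy values).
index = {
    "case-001": [1.0, 0.0, 0.0],
    "case-002": [0.7, 0.7, 0.0],
    "case-003": [0.0, 0.0, 1.0],
}

result = top_k_similar([0.9, 0.2, 0.0], index, k=2)
print(result)
```

The archive embeddings only need to be computed once, so each new query costs one encoder pass plus the similarity search.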

Key Advantages of Openness

One of the most exciting aspects? Google is open-sourcing these models.

This level of transparency is rare in healthcare AI. It means developers, hospitals, and researchers can:

Audit the models for bias or safety.

Fine-tune them for local needs.

Build on them for new tools and platforms.

This could drastically accelerate innovation and ensure responsible deployment in real-world settings.

But It’s Not All Perfect

The creators of these models are upfront: automated benchmarks aren’t enough. Real-world clinical validation is still essential. Some benchmarks may be nearing saturation, meaning models score high, but the scores don’t reflect practical usefulness.

We need better, tougher benchmarks to truly gauge real-world performance - especially when patient safety is on the line.

Final Thoughts

MedGemma and MedSigLIP mark a turning point in medical AI. They combine:

High performance

Flexibility

Efficiency

Open access

That’s a rare combo.

They’re not about replacing doctors. They’re about amplifying their abilities, cutting down on administrative burden, and expanding access to high-quality tools, even in resource-limited settings.

Now the challenge, and the opportunity, lies in how the developer community builds on this foundation to deliver ethical, validated, and truly impactful healthcare AI.

FAQs About MedGemma and MedSigLIP

1. What is MedGemma?

MedGemma is a family of vision-language AI models developed by Google, designed to interpret and reason with both medical text and images. It’s based on the Gemma 3 architecture and optimized for clinical use.

2. How is MedSigLIP different from MedGemma?

MedSigLIP is a dedicated medical image encoder. It specializes in analyzing visual data like X-rays, skin images, and histopathology, complementing MedGemma’s broader vision-language capabilities.

3. Are these models open-source?

Yes. Google is releasing MedGemma and MedSigLIP openly, allowing developers and researchers to fine-tune, evaluate, and build upon them for diverse medical applications.

4. Can these models replace doctors?

No. They’re designed to support medical professionals, not replace them. Their value lies in augmenting clinical decision-making, not automating it entirely.

5. What makes these models unique in healthcare AI?

Their combination of strong general knowledge, deep medical understanding, efficient performance, and open access sets them apart. They’re also capable of delivering high accuracy while requiring far less computational power than many alternatives.